This set of blog posts is part of the book/course on Data Science for the Internet of Things. We welcome your comments at jjb at datagraphy dot io. I have been a founding member of the Data Science for Internet of Things Course. The current batch of the course is closed, but a new course we are working on at the University of Oxford is open for applications.

You can find the first post, which describes the initial steps of the methodology, here and on my DSC here.

This new post focuses on continuous improvement, the phase that follows the design and deployment of the architecture and the model.

Continuous improvement

Continuous improvement is important in this methodology because of several categories of factors.

The first category is the fast-evolving nature of both data science and IoT. New algorithms, techniques and tools are constantly being developed, and it is worth capitalizing on them.

The second category is the problem itself. The problem may evolve, in which case the model built to solve it will need to be reviewed and adjusted.

The third category is due to the nature of IoT devices. As explained in the book Enterprise IoT[1], network devices (the things of IoT) can be regularly upgraded through software. While hardware remains unchanged for a certain period of time, software is updated often, bringing new capabilities to the devices that can be used to solve the problem initially defined.

The fourth category concerns the way the methodology was initially implemented. If it was implemented in a waterfall way, the model can be considered a thorough solution, but it will still need to be reviewed regularly. If, however, the methodology was implemented in an agile way, the initial implementation is only a first, good-enough step, and definitely not the last one.

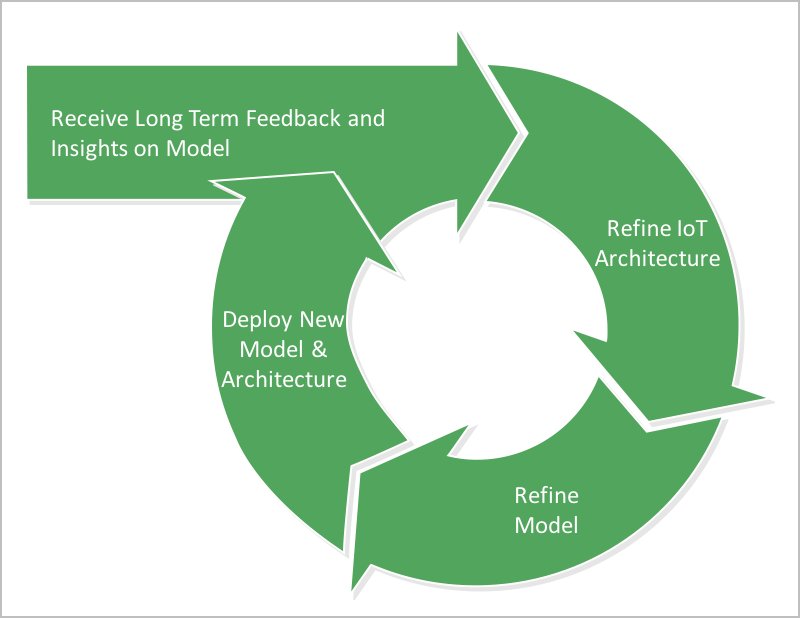

This continuous improvement phase is split into four activities:

- Get feedback and insights on model

- Refine IoT architecture

- Refine model

- Deploy new model and architecture

This phase is represented in the figure above. It is a very liberal adaptation of the Deming[2] wheel (Plan - Do - Check - Act).

How often the improvement activities should be conducted depends heavily on the categories of factors listed above. For instance, if the methodology has been implemented in an agile way, reviews should be very frequent, since we are then talking about iterations of the agile process rather than continuous improvement proper.

Detailed description of activities

Feedback and insights

Whether we decide to revise the model at regular intervals or when a given event occurs (often an unexpected result or behavior), we need to collect information on the current state. In the context of data science for IoT, this means collecting data on the output (i.e. the predictions made by the model), the input (the data used by the model) and the real outcome (i.e. what actually happened, as opposed to the model results).

For instance, if we are looking at an industrial analytics application that predicts pressure based on a set of diverse parameters coming from sensors, we want to collect the time series of all the data coming from the sensors, the time series of the actual pressure points and the predicted time series by the model we initially designed.

Whatever the rationale behind the continuous improvement process, collecting this data provides a sound foundation for reviewing the work done previously and for constructing a new dataset.
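As an illustration of the pressure example above, here is a minimal Python sketch of how the three time series could be joined into a review dataset. It assumes, for simplicity, that sensor readings, actual pressure and predicted pressure share the same integer timestamps; `ReviewRow` and `build_review_dataset` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


# Hypothetical record type for the review dataset: one row per timestamp.
@dataclass
class ReviewRow:
    timestamp: int               # assumed integer timestamps, e.g. seconds
    inputs: Dict[str, float]     # sensor readings used by the model
    actual: Optional[float]      # measured pressure (the real outcome)
    predicted: Optional[float]   # pressure predicted by the deployed model


def build_review_dataset(
    sensor_inputs: Dict[int, Dict[str, float]],
    actual: Dict[int, float],
    predicted: Dict[int, float],
) -> List[ReviewRow]:
    """Join inputs, real outcomes and model outputs on exact timestamps.

    Every timestamp present in the sensor inputs is kept; a missing actual
    or predicted value is stored as None instead of dropping the row, so
    gaps remain visible during exploratory analysis.
    """
    return [
        ReviewRow(
            timestamp=ts,
            inputs=sensor_inputs[ts],
            actual=actual.get(ts),
            predicted=predicted.get(ts),
        )
        for ts in sorted(sensor_inputs)
    ]


# Illustrative values only.
sensors = {0: {"temperature": 71.2}, 60: {"temperature": 72.0}}
actual = {0: 101.3, 60: 101.9}
predicted = {0: 101.0}
dataset = build_review_dataset(sensors, actual, predicted)
```

In practice sensors rarely sample in lockstep, so a real pipeline would typically resample the series or use an as-of join (for instance pandas `merge_asof`) rather than exact timestamp matching.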

A simple exploratory analysis of this newly formed dataset can provide clues about the current performance of the model.
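A first exploratory step could be to summarize the prediction errors. The sketch below (plain Python, with the hypothetical function name `error_summary`) computes the mean signed error (bias), the mean absolute error and the root mean squared error over (actual, predicted) pairs, skipping pairs where either value is missing.

```python
import math
from typing import List, Optional, Tuple


def error_summary(pairs: List[Tuple[Optional[float], Optional[float]]]) -> dict:
    """Summarize prediction errors from (actual, predicted) pairs.

    Pairs where either value is missing are skipped and counted in 'missing'.
    """
    errors = [p - a for a, p in pairs if a is not None and p is not None]
    missing = len(pairs) - len(errors)
    if not errors:
        return {"n": 0, "missing": missing}
    n = len(errors)
    return {
        "n": n,
        "missing": missing,
        "bias": sum(errors) / n,  # mean signed error: > 0 means over-prediction
        "mae": sum(abs(e) for e in errors) / n,
        "rmse": math.sqrt(sum(e * e for e in errors) / n),
    }


# Illustrative (actual, predicted) pressure pairs; the last prediction is missing.
summary = error_summary([(101.3, 101.0), (101.9, 102.5), (102.4, None)])
```

Comparing these figures with those measured when the model was first deployed gives a rough indication of whether its performance has drifted.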

In addition to this data collection, it is important to gather feedback from the users of the model, who may have additional elements to contribute. For instance, their use of the model may have changed, or the business environment they operate in may be more dynamic than initially assumed.

Then, as explained above, there may be new developments in algorithms, techniques and tools to take into consideration. Examples include (non-exhaustive list):

- Enhanced algorithms to predict rare events (i.e. imbalanced classes with little data)

- Perception-based computing and its role in dynamically complex sensory environments

- Reinforcement learning (see Ajit’s post on KDnuggets)

- New techniques for solving non-convex optimization problems (see this post)

Refine IoT Architecture

After collecting inputs and data, we follow a sequence similar to the one described in the previous post:

- We look at the IoT architecture;

- Then, we look at the model.

The rationale for looking at the architecture first is that the architecture determines what data is available.

The two drivers for changing the architecture are:

- Additional data available from the devices

- Additional tools for processing

In the first case, this depends on whether the devices have been updated to provide additional services and data streams. For instance, a device may have sensors that were not initially supported by its firmware, such as an industrial device that initially provides only temperature readings and is later upgraded to provide pressure readings as well. The devices may also now be able to handle some of the computation themselves, which they could not do initially (much like adding or updating an application on a smartphone). In either case, new data becomes available for use in the model.

In the second case, we may want to change the architecture to adjust or add processing. For instance, the initial architecture may perform stream processing directly on an Apache Spark engine, and we may need or want to move part of that processing to the edge by adding an edge device. New tools may also have become available that process the data in a completely different way.
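To illustrate what moving processing to the edge can mean, the following sketch aggregates raw readings into fixed, non-overlapping 60-second windows before they are sent upstream, so that only one mean value per window leaves the device. It is plain Python written for illustration, not the API of Apache Spark or of any particular edge platform; the function name and window size are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def tumbling_window_means(
    readings: Iterable[Tuple[int, float]], window_s: int
) -> List[Tuple[int, float]]:
    """Aggregate raw (timestamp, value) readings into tumbling windows.

    Returns (window_start, mean_value) pairs sorted by window start.
    Running this on an edge device means only one value per window is
    forwarded instead of every raw reading.
    """
    sums: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for ts, value in readings:
        start = ts - (ts % window_s)  # start of the window this reading falls in
        sums[start] += value
        counts[start] += 1
    return [(start, sums[start] / counts[start]) for start in sorted(sums)]


# Illustrative raw readings: (seconds, sensor value).
raw = [(0, 10.0), (20, 12.0), (59, 14.0), (60, 20.0), (90, 22.0)]
aggregated = tumbling_window_means(raw, window_s=60)
```

This is a trade-off: aggregating at the edge reduces bandwidth and central processing load, but the central model then only sees window means, which may change the features it can use.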

In the previous step, one of the elements was to collect a new dataset for further analysis. In this phase, we may have added data streams from the devices or from the edge. It may therefore be necessary to refine or rebuild the dataset using this new data.
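The sketch below shows one way a new stream, such as pressure readings from an upgraded device, could be added to an existing dataset. Rows recorded before the firmware update keep `None` for the new feature, so the history is preserved and the gap stays explicit. The helpers `add_stream` and `complete_rows` are hypothetical names used for illustration.

```python
from typing import Dict, List, Optional

Row = Dict[str, Optional[float]]


def add_stream(dataset: Dict[int, Row], name: str, stream: Dict[int, float]) -> Dict[int, Row]:
    """Return a copy of the dataset with a new feature column added.

    Timestamps that exist in only one of the two sources get None for
    the columns they lack, so every row has the same set of columns.
    """
    columns = {col for row in dataset.values() for col in row}
    merged: Dict[int, Row] = {}
    for ts in sorted(set(dataset) | set(stream)):
        row: Row = {col: None for col in columns}
        row.update(dataset.get(ts, {}))
        row[name] = stream.get(ts)
        merged[ts] = row
    return merged


def complete_rows(dataset: Dict[int, Row], features: List[str]) -> Dict[int, Row]:
    """Keep only the rows where every requested feature has a value."""
    return {
        ts: row
        for ts, row in dataset.items()
        if all(row.get(f) is not None for f in features)
    }


# Illustrative values: temperature history, pressure available only after the update.
history = {0: {"temperature": 70.0}, 60: {"temperature": 71.0}, 120: {"temperature": 71.5}}
pressure = {120: 101.8, 180: 102.0}
merged = add_stream(history, "pressure", pressure)
training = complete_rows(merged, ["temperature", "pressure"])
```

Whether to train only on the (shorter) period where all features exist, or to impute the missing history, is a modelling decision to make when refining the model.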

The implications of changing the architecture can be far-reaching, so such changes should be made with care; otherwise we are no longer doing continuous improvement but starting over. The level of effort implied by the change of architecture should be assessed to ensure that it stays manageable. If it does not, it may be better to start from scratch (i.e. from the problem definition).

Refine model

Once the architecture has been reviewed and adjusted, the model needs to be reviewed to take potential changes into consideration:

- Change in business environment (i.e. the problem we were trying to solve initially has changed)

- Shift in the accuracy of the model (it no longer works as well as it did initially)

- New data available (through the previous step, we got additional data)

- New algorithmic methods available for solving the problem (which we believe might make the model more efficient)
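Since a review can be triggered either at regular intervals or by an event such as a shift in accuracy, the decision could be encoded as a simple policy. In this minimal sketch, the 90-day period and the 1.25 error-ratio tolerance are illustrative assumptions, and `ReviewPolicy` and `review_reasons` are hypothetical names. The baseline and recent errors could be, for example, mean absolute errors measured at deployment time and over a recent window.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class ReviewPolicy:
    period: timedelta        # scheduled review interval (assumed value)
    max_error_ratio: float   # tolerated ratio of recent error to baseline error


def review_reasons(
    policy: ReviewPolicy,
    last_review: datetime,
    now: datetime,
    baseline_mae: float,
    recent_mae: float,
) -> List[str]:
    """Return the reasons (if any) for starting a continuous improvement cycle."""
    reasons = []
    if now - last_review >= policy.period:
        reasons.append("scheduled review due")
    # Event-based trigger: the model's error has grown beyond the tolerance.
    if baseline_mae > 0 and recent_mae / baseline_mae > policy.max_error_ratio:
        reasons.append("accuracy shift")
    return reasons


policy = ReviewPolicy(period=timedelta(days=90), max_error_ratio=1.25)
drifted = review_reasons(policy, datetime(2024, 1, 1), datetime(2024, 2, 1),
                         baseline_mae=0.40, recent_mae=0.55)
scheduled = review_reasons(policy, datetime(2024, 1, 1), datetime(2024, 4, 15),
                           baseline_mae=0.40, recent_mae=0.42)
```

Other triggers from the list above, such as a change in the business environment or new data streams, would typically come from users or from the architecture review rather than from a metric, so they are not encoded here.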

This list is by no means exhaustive, but for all these reasons we need to re-analyze the problem at hand and rework the model, using the new dataset that has been created (either in the first activity or in the second, depending on whether additional data streams have become available).

Using this dataset, we repeat the activities described in the previous post:

- Design model to solve the problem

- Evaluate the model

- Deploy the model and the architecture

We won’t go through them again here, but recall that the model should be assessed both from a statistical point of view (minimizing bias and variance, and trading off precision against recall) and on whether it actually solves the problem (its impact on the KPIs we have defined).

For this second aspect (solving the problem), the KPIs may have changed, both in their target values and in which KPIs need to be addressed.
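For a classification use case (for instance, a hypothetical model flagging an upcoming over-pressure event), the precision/recall trade-off mentioned above can be made concrete by computing both metrics at several decision thresholds. The scores and labels below are made-up values for illustration, and `precision_recall_at` is a hypothetical helper.

```python
from typing import Sequence, Tuple


def precision_recall_at(
    scores: Sequence[float], labels: Sequence[int], threshold: float
) -> Tuple[float, float]:
    """Precision and recall when scores >= threshold are classified as positive (1).

    By convention here, a metric with an empty denominator is reported as 0.0.
    """
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up model scores and observed outcomes (1 = over-pressure event occurred).
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
labels = [1, 0, 1, 1, 0, 0]

for threshold in (0.85, 0.5, 0.35):
    p, r = precision_recall_at(scores, labels, threshold)
    print(f"threshold={threshold}: precision={p:.2f} recall={r:.2f}")
```

Lowering the threshold raises recall at the cost of precision; which point to choose should be driven by the KPIs defined for the problem, for example the relative cost of a missed event versus a false alarm.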

In summary

This phase is about improving the model and the architecture designed initially, whether it was conceived as a finished project or as a first step in an iterative way of working (i.e. Agile).

However, it is not intended to replace the previously built model and architecture, only to evolve and improve them. Care should therefore be taken over the type of changes introduced, especially in the architecture.

Depending on the context, several elements can drive this evolution. We noted the following:

- change in the performance of the current architecture and model

- additional needs from users (this element is key if following an agile methodology)

- new tools, techniques and algorithms available that may help to solve the problem in a cheaper or faster way

- additional data streams available from the IoT devices thanks to software updates

In upcoming posts, we will explore the different phases in more detail and look at potential deliverables for each of them.

To conclude this post, we welcome your comments at jjb at datagraphy dot io. Please visit the University of Oxford Continuing Education website and/or email me or Ajit at ajit dot jaokar at futuretext dot com if you are interested in joining the course.